For $l$ classes $C_1, \ldots, C_l$, given a vector $X = (x_1, \ldots, x_n)$ of class labels computed at the prediction stage of the classification algorithm and a vector $Y = (y_1, \ldots, y_n)$ of expected class labels, the problem is to evaluate the classifier by computing the confusion matrix and related quality metrics: precision, error rate, and so on.

The definitions below use the following notation:

tp i (true positive) |

the number of correctly recognized observations for class C i |

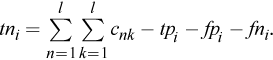

tn i (true negative) |

the number of correctly recognized observations that do not belong to the class C i |

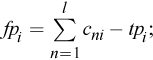

fp i (false positive) |

the number of observations that were incorrectly assigned to the class C i |

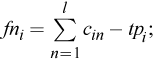

fn i (false negative) |

the number of observations that were not recognized as belonging to the class C i |

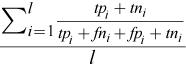
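As an illustration of the four counts, the sketch below tallies them for each class directly from the two label vectors, treating each class in turn as "positive" and all others as "negative". The function name `per_class_counts` and the sample vectors are hypothetical and are not part of the library.

```python
def per_class_counts(predicted, expected, classes):
    """Return {class: (tp, tn, fp, fn)} using one-vs-rest counting.

    predicted -- labels produced by the classifier (the vector X)
    expected  -- ground-truth labels (the vector Y)
    """
    counts = {}
    for c in classes:
        tp = tn = fp = fn = 0
        for x, y in zip(predicted, expected):
            if x == c and y == c:
                tp += 1  # correctly recognized as class c
            elif x != c and y != c:
                tn += 1  # correctly recognized as not class c
            elif x == c and y != c:
                fp += 1  # incorrectly assigned to class c
            else:
                fn += 1  # belongs to class c but was not recognized
        counts[c] = (tp, tn, fp, fn)
    return counts


# Hypothetical sample data: six observations, three classes.
X = [0, 1, 2, 2, 1, 0]  # predicted labels
Y = [0, 2, 2, 1, 1, 0]  # expected labels
counts = per_class_counts(X, Y, classes=[0, 1, 2])
for c, (tp, tn, fp, fn) in counts.items():
    print(f"class {c}: tp={tp} tn={tn} fp={fp} fn={fn}")
```

For every class the four counts sum to $n$, the number of observations, which is a quick sanity check on any implementation.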

The library uses the following quality metrics for multi-class classifiers:

|

Quality Metric |

Definition |

|---|---|

|

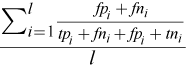

Average accuracy |

|

|

Error rate |

|

|

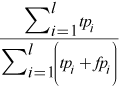

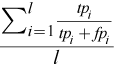

Micro precision (Precision μ ) |

|

|

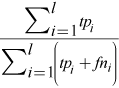

Micro recall (Recall μ ) |

|

|

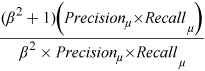

Micro F-score (F-score μ ) |

|

|

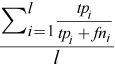

Macro precision (Precision M) |

|

|

Macro recall (Recall M) |

|

|

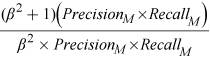

Macro F-score (F-score M) |

|

For more details of these metrics, including the evaluation focus, refer to [Sokolova09].
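To make the difference between micro and macro averaging concrete, the following sketch computes the eight metrics from per-class counts. It assumes the standard definitions from [Sokolova09]; the function name `multiclass_metrics`, the `beta` argument, and the sample counts are hypothetical and do not reflect the library's API. It also assumes every class is predicted and present at least once, since otherwise a denominator is zero.

```python
def multiclass_metrics(counts, beta=1.0):
    """Compute averaged metrics from a list of (tp, tn, fp, fn), one per class."""
    num_classes = len(counts)

    def f_score(precision, recall):
        # Weighted harmonic mean of precision and recall.
        return (beta**2 + 1) * precision * recall / (beta**2 * precision + recall)

    # Accuracy and error are averaged over the per-class one-vs-rest problems.
    avg_accuracy = sum((tp + tn) / (tp + tn + fp + fn)
                       for tp, tn, fp, fn in counts) / num_classes
    error_rate = sum((fp + fn) / (tp + tn + fp + fn)
                     for tp, tn, fp, fn in counts) / num_classes

    # Micro averaging: pool the counts over all classes, then take the ratio.
    total_tp = sum(tp for tp, _, _, _ in counts)
    micro_precision = total_tp / sum(tp + fp for tp, _, fp, _ in counts)
    micro_recall = total_tp / sum(tp + fn for tp, _, _, fn in counts)

    # Macro averaging: take the ratio per class, then average the ratios.
    macro_precision = sum(tp / (tp + fp) for tp, _, fp, _ in counts) / num_classes
    macro_recall = sum(tp / (tp + fn) for tp, _, _, fn in counts) / num_classes

    return {
        "average_accuracy": avg_accuracy,
        "error_rate": error_rate,
        "micro_precision": micro_precision,
        "micro_recall": micro_recall,
        "micro_f_score": f_score(micro_precision, micro_recall),
        "macro_precision": macro_precision,
        "macro_recall": macro_recall,
        "macro_f_score": f_score(macro_precision, macro_recall),
    }


# Hypothetical (tp, tn, fp, fn) counts for three classes.
example_counts = [(2, 4, 0, 0), (1, 3, 1, 1), (1, 3, 1, 1)]
metrics = multiclass_metrics(example_counts)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Micro averaging weights every observation equally, so large classes dominate the result, whereas macro averaging weights every class equally regardless of its size.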

The following is the confusion matrix:

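| | Classified as Class $C_1$ | $\ldots$ | Classified as Class $C_i$ | $\ldots$ | Classified as Class $C_l$ |
|---|---|---|---|---|---|
| Actual Class $C_1$ | $c_{11}$ | $\ldots$ | $c_{1i}$ | $\ldots$ | $c_{1l}$ |
| $\ldots$ | $\ldots$ | $\ldots$ | $\ldots$ | $\ldots$ | $\ldots$ |
| Actual Class $C_i$ | $c_{i1}$ | $\ldots$ | $c_{ii}$ | $\ldots$ | $c_{il}$ |
| $\ldots$ | $\ldots$ | $\ldots$ | $\ldots$ | $\ldots$ | $\ldots$ |
| Actual Class $C_l$ | $c_{l1}$ | $\ldots$ | $c_{li}$ | $\ldots$ | $c_{ll}$ |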
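A confusion matrix with this layout (rows for actual classes, columns for assigned classes) can be built from the two label vectors in a single pass. The sketch below is illustrative only: the function name `confusion_matrix` is hypothetical, and it assumes class labels are encoded as integers from 0 to $l - 1$.

```python
def confusion_matrix(predicted, expected, num_classes):
    """Return an l x l matrix where entry [i][j] counts observations
    of actual class i that were classified as class j."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for x, y in zip(predicted, expected):
        matrix[y][x] += 1  # row = actual class, column = assigned class
    return matrix


# Hypothetical sample data: six observations, three classes.
X = [0, 1, 2, 2, 1, 0]  # predicted labels
Y = [0, 2, 2, 1, 1, 0]  # expected labels
cm = confusion_matrix(X, Y, num_classes=3)
for row in cm:
    print(row)
```

Diagonal entries count correctly classified observations, and all entries together sum to $n$.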

The positives and negatives are defined through elements of the confusion matrix as follows: