Given $n$ feature vectors $x_1 = (x_{11}, \ldots, x_{1p}), \ldots, x_n = (x_{n1}, \ldots, x_{np})$ of size $p$, a vector of class labels $y = (y_1, \ldots, y_n)$, where $y_i \in K = \{-1, 1\}$ describes the class to which the feature vector $x_i$ belongs, and a weak learner algorithm, the problem is to build a two-class BrownBoost classifier.

Training Stage

The model is trained using the Freund method [Freund01] as follows:

-

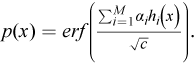

Calculate c = erfinv 2(1 - ε), where

erfinv(x) is an inverse error function,

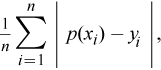

ε is a target classification error of the algorithm defined as

erf(x) is the error function,

h i (x) is a hypothesis formulated by the i-th weak learner, i = 1,...,M,

α i is the weight of the hypothesis.

- Set initial prediction values: $r_1(x, y) = 0$.

- Set the "remaining time": $s_1 = c$.

-

Do for i=1,2,... until s i+1 ≤ 0

-

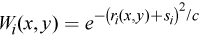

With each feature vector and its label of positive weight, associate

-

Call the weak learner with the distribution defined by normalizing W i (x, y) to receive a hypothesis h i (x)

-

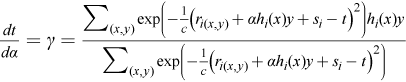

Solve the differential equation

with given boundary conditions t = 0 and α = 0 to find t i = t* > 0 and α i = α* such that either γ ≤ ν or t* = s i , where ν is a given small constant needed to avoid degenerate cases

-

Update the prediction values: r i+1(x, y) = r i (x, y)+ α i h i (x)y

- Update "remaining time": s i+1 = s i - t i

End do
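Step 1 needs the inverse error function, which Python's `math` module does not provide. A minimal sketch, assuming Python 3.8+ and the identity $\operatorname{erf}(u) = 2\Phi(u\sqrt{2}) - 1$ with $\Phi$ the standard normal CDF, obtains it from `statistics.NormalDist.inv_cdf`; the helper names `erfinv` and `time_budget` are hypothetical, not part of any library.

```python
import math
from statistics import NormalDist


def erfinv(x: float) -> float:
    """Inverse error function for -1 < x < 1.

    From erf(u) = 2 * Phi(u * sqrt(2)) - 1, with Phi the standard normal CDF,
    it follows that erfinv(x) = Phi^{-1}((x + 1) / 2) / sqrt(2).
    """
    return NormalDist().inv_cdf((x + 1.0) / 2.0) / math.sqrt(2.0)


def time_budget(eps: float) -> float:
    """Step 1: c = erfinv(1 - eps) ** 2 for a target error 0 < eps < 1."""
    return erfinv(1.0 - eps) ** 2


c = time_budget(0.1)
# erf(sqrt(c)) recovers 1 - eps, because sqrt(c) = erfinv(1 - eps).
print(c, math.erf(math.sqrt(c)))
```

A smaller target error $\varepsilon$ gives a larger $c$, that is, a larger total time budget $s_1$ for boosting.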

The result of the model training is the array of $M$ weak learners $h_i$ together with their weights $\alpha_i$, where $M$ is the number of loop iterations performed.
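The training loop can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the library implementation: the weak learner is a hypothetical single-feature decision stump (`stump_learner`), the differential equation is integrated with a simple forward Euler scheme in $\alpha$ (step `d_alpha`), and `nu`, `d_alpha`, `max_iter`, `gamma`, and `train_brownboost` are illustrative names and defaults. The time budget `c` is passed in directly, computed as in step 1.

```python
import math


def stump_learner(X, y, w):
    """Hypothetical weak learner: the one-feature threshold stump with the
    largest weighted correlation sum_j w_j * h(x_j) * y_j."""
    best = None
    for f in range(len(X[0])):
        for thr in sorted({x[f] for x in X}):
            for sign in (1.0, -1.0):
                corr = sum(wj * yj * (sign if xj[f] > thr else -sign)
                           for xj, yj, wj in zip(X, y, w))
                if best is None or corr > best[0]:
                    best = (corr, f, thr, sign)
    _, bf, bthr, bsign = best
    return lambda x: bsign if x[bf] > bthr else -bsign


def gamma(r, hy, alpha, t, s, c):
    """dt/dalpha from the differential equation, given r_i(x, y) and h_i(x) * y."""
    q = [(ri + alpha * hyi + s - t) ** 2 / c for ri, hyi in zip(r, hy)]
    q_min = min(q)  # the shift cancels in the ratio and avoids exp underflow
    e = [math.exp(q_min - qi) for qi in q]
    return sum(ei * hyi for ei, hyi in zip(e, hy)) / sum(e)


def train_brownboost(X, y, c, learner=stump_learner, nu=1e-3, d_alpha=1e-2, max_iter=100):
    """Return a list of (alpha_i, h_i) pairs; c is the time budget from step 1."""
    r = [0.0] * len(X)  # r_1(x, y) = 0
    s = c               # remaining time s_1 = c
    model = []
    for _ in range(max_iter):
        if s <= 0.0:
            break
        # W_i(x, y) = exp(-(r_i + s_i)^2 / c), normalized into a distribution.
        q = [(ri + s) ** 2 / c for ri in r]
        q_min = min(q)
        W = [math.exp(q_min - qi) for qi in q]
        total = sum(W)
        h = learner(X, y, [wi / total for wi in W])
        hy = [h(x) * yi for x, yi in zip(X, y)]
        # Forward Euler on dt/dalpha = gamma from (alpha, t) = (0, 0),
        # stopping when gamma <= nu or t reaches the remaining time s.
        alpha, t = 0.0, 0.0
        while True:
            g = gamma(r, hy, alpha, t, s, c)
            if g <= nu:
                break
            if t + g * d_alpha >= s:
                alpha += (s - t) / g  # final partial step lands on t = s
                t = s
                break
            alpha += d_alpha
            t += g * d_alpha
        if t <= 0.0:
            break  # degenerate weak learner: no time consumed, so stop
        model.append((alpha, h))
        r = [ri + alpha * hyi for ri, hyi in zip(r, hy)]  # r_{i+1} = r_i + alpha_i h_i(x) y
        s -= t                                            # s_{i+1} = s_i - t_i
    return model
```

On data that a single stump separates, $h_1(x) y = 1$ for every sample, so $\gamma \equiv 1$, $t = \alpha$, and the first iteration consumes the whole budget with $\alpha_1 = c$. The prediction rule then returns $\pm\operatorname{erf}(\sqrt{c}) = \pm(1 - \varepsilon)$.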

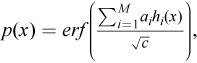

Prediction Stage

Given the BrownBoost classifier and r feature vectors x 1,…,x r , the problem is to calculate the final classification confidence, a number from the interval [-1, 1], using the rule
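The prediction rule reduces to a weighted vote passed through the error function. A minimal Python sketch, assuming the model is stored as a list of `(alpha_i, h_i)` pairs; the function name `predict_confidence` and the two-stump model are hypothetical.

```python
import math


def predict_confidence(model, c, x):
    """p(x) = erf(sum_i alpha_i * h_i(x) / sqrt(c)), a value in [-1, 1]."""
    margin = sum(alpha * h(x) for alpha, h in model)
    return math.erf(margin / math.sqrt(c))


# Hypothetical model: two weighted threshold stumps on feature 0.
model = [
    (0.8, lambda x: 1.0 if x[0] > 1.5 else -1.0),
    (0.4, lambda x: 1.0 if x[0] > 0.5 else -1.0),
]
c = 1.3
for x in ([0.0], [1.0], [2.0]):
    print(x, predict_confidence(model, c, x))
```

The sign of $p(x)$ gives the predicted class, and its magnitude the confidence; when the stumps disagree, as at `[1.0]`, the weaker vote partly cancels the stronger one and $|p(x)|$ shrinks.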