The distributed processing mode assumes that the data set is split into nblocks blocks across computation nodes.

Algorithm Parameters

At the training stage, the implicit ALS recommender in the distributed processing mode has the following parameters:

| Parameter | Default Value | Description |
|---|---|---|
| computeStep | Not applicable | The parameter required to initialize the algorithm. Can be: step1Local (the first step, performed on local nodes), step2Master (the second step, performed on the master node), step3Local (the third step, performed on local nodes), or step4Local (the fourth step, performed on local nodes). |
| algorithmFPType | double | The floating-point type that the algorithm uses for intermediate computations. Can be float or double. |
| method | fastCSR | Performance-oriented computation method for CSR numeric tables, the only method supported by the algorithm. |
| nFactors | 10 | The total number of factors. |
| maxIterations | 5 | The number of iterations. |
| alpha | 40 | The rate of confidence. |
| lambda | 0.01 | The regularization parameter. |
| preferenceThreshold | 0 | Threshold used to define preference values. 0 is the only threshold supported so far. |
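The defaults in the table can be mirrored in a plain C++ struct, which helps when wiring the same settings into every node. This is a minimal sketch. `ImplicitAlsParams` and `validate` are hypothetical names and are not part of the Intel DAAL API. The checks encode only the constraints stated in the table (float or double, fastCSR only, a preferenceThreshold of 0), plus an assumed sanity check that nFactors is positive.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical mirror of the documented training parameters (not the DAAL API).
struct ImplicitAlsParams {
    std::string algorithmFPType = "double"; // "float" or "double"
    std::string method = "fastCSR";         // the only supported method
    std::size_t nFactors = 10;              // total number of factors
    std::size_t maxIterations = 5;          // number of iterations
    double alpha = 40.0;                    // rate of confidence
    double lambda = 0.01;                   // regularization parameter
    double preferenceThreshold = 0.0;       // only 0 is supported
};

// Returns true if the parameters respect the constraints listed in the table
// (nFactors > 0 is an extra, assumed sanity check).
bool validate(const ImplicitAlsParams& p) {
    const bool fpOk = p.algorithmFPType == "float" || p.algorithmFPType == "double";
    const bool methodOk = p.method == "fastCSR";
    const bool thresholdOk = p.preferenceThreshold == 0.0;
    return fpOk && methodOk && thresholdOk && p.nFactors > 0;
}
```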

Computation Process

At each iteration, the implicit ALS training algorithm alternates between re-computing user factors (X) and item factors (Y). These computations split each iteration into the following parts:

- Re-compute all user factors using the input data sets and the item factors computed previously.
- Re-compute all item factors using the input data sets in the transposed format and the user factors computed in the first part.

Important

In Intel DAAL, computation of the implicit ALS in the distributed processing mode requires that you provide the matrices X and Y and their transposed counterparts.

Each part includes four steps executed either on local nodes or on the master node, as explained below and illustrated by graphics for nblocks=3. The main loop of the implicit ALS training stage is executed on the master node. The following pseudocode illustrates the entire computation:

```
for (iteration = 0; iteration < maxIterations; iteration++)
{
    /* Update partial user factors */
    computeStep1Local(itemsPartialResultLocal);   /* on each local node */
    computeStep2Master();                         /* on the master node */
    computeStep3Local(itemsOffsetTable, itemsPartialResultLocal, itemsFactorsToNodes); /* on each local node */
    usersPartialResultLocal = computeStep4Local(itemsPartialResultLocal);              /* on each local node */

    /* Update partial item factors */
    computeStep1Local(usersPartialResultLocal);   /* on each local node */
    computeStep2Master();                         /* on the master node */
    computeStep3Local(usersOffsetTable, usersPartialResultLocal, usersFactorsToNodes); /* on each local node */
    itemsPartialResultLocal = computeStep4Local(usersPartialResultLocal);              /* on each local node */
}
```

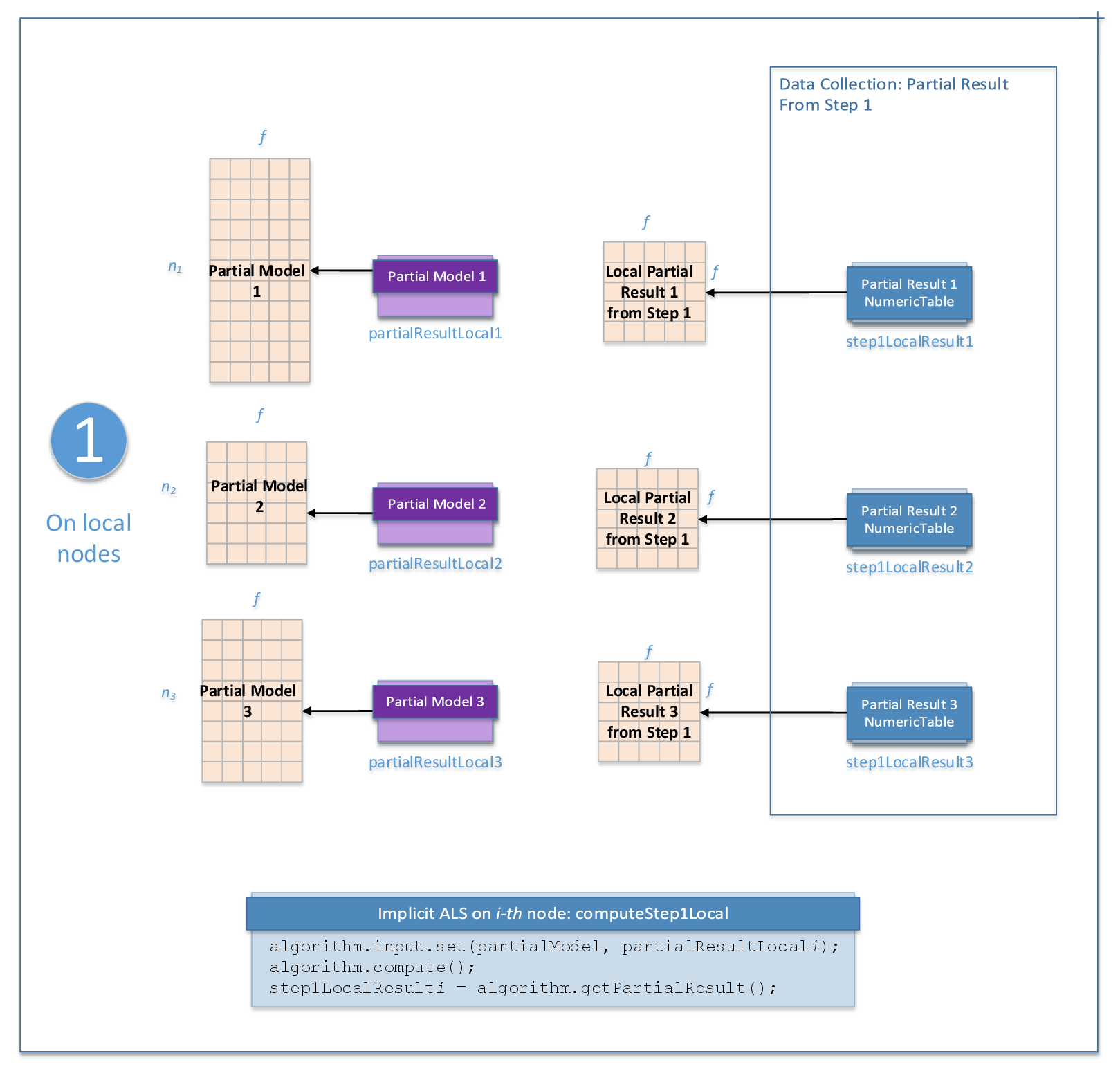

Step 1 - on Local Nodes

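This step works with the factors matrix: the item factors Y when user factors are re-computed, and the user factors X when item factors are re-computed.

Parts of this matrix are used as input partial models.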

In this step, implicit ALS recommender training accepts the input described below. Pass the Input ID as a parameter to the methods that provide input for your algorithm. For more details, see Algorithms.

| Input ID | Input |
|---|---|
| partialModel | Partial model computed on the local node. |

In this step, implicit ALS recommender training calculates the result described below. Pass the Result ID as a parameter to the methods that access the results of your algorithm. For more details, see Algorithms.

| Result ID | Result |
|---|---|
| outputOfStep1ForStep2 | Pointer to the f x f numeric table with the cross-product of the factors in the local partial model, where f is the number of factors (nFactors). |
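The Step 1 result can be sketched under one assumption: the partial model on node i stores its factors as a dense row-major block, with one row per item (or user) and f = nFactors columns. Under that assumption the result is the f x f cross-product B^T B of that block. `localCrossProduct` and the row-major layout are illustrative assumptions and do not describe the DAAL implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Computes B^T * B for a row-major block B with nRows rows and f columns.
// The result is an f x f row-major matrix: the local cross-product of Step 1.
std::vector<double> localCrossProduct(const std::vector<double>& block,
                                      std::size_t nRows, std::size_t f) {
    assert(block.size() == nRows * f);
    std::vector<double> gram(f * f, 0.0);
    for (std::size_t r = 0; r < nRows; ++r) {
        const double* row = &block[r * f];
        // Accumulate the outer product row^T * row.
        for (std::size_t a = 0; a < f; ++a)
            for (std::size_t b = 0; b < f; ++b)
                gram[a * f + b] += row[a] * row[b];
    }
    return gram;
}
```

For example, a block with rows (1, 2) and (3, 4) yields the 2 x 2 table [[10, 14], [14, 20]].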

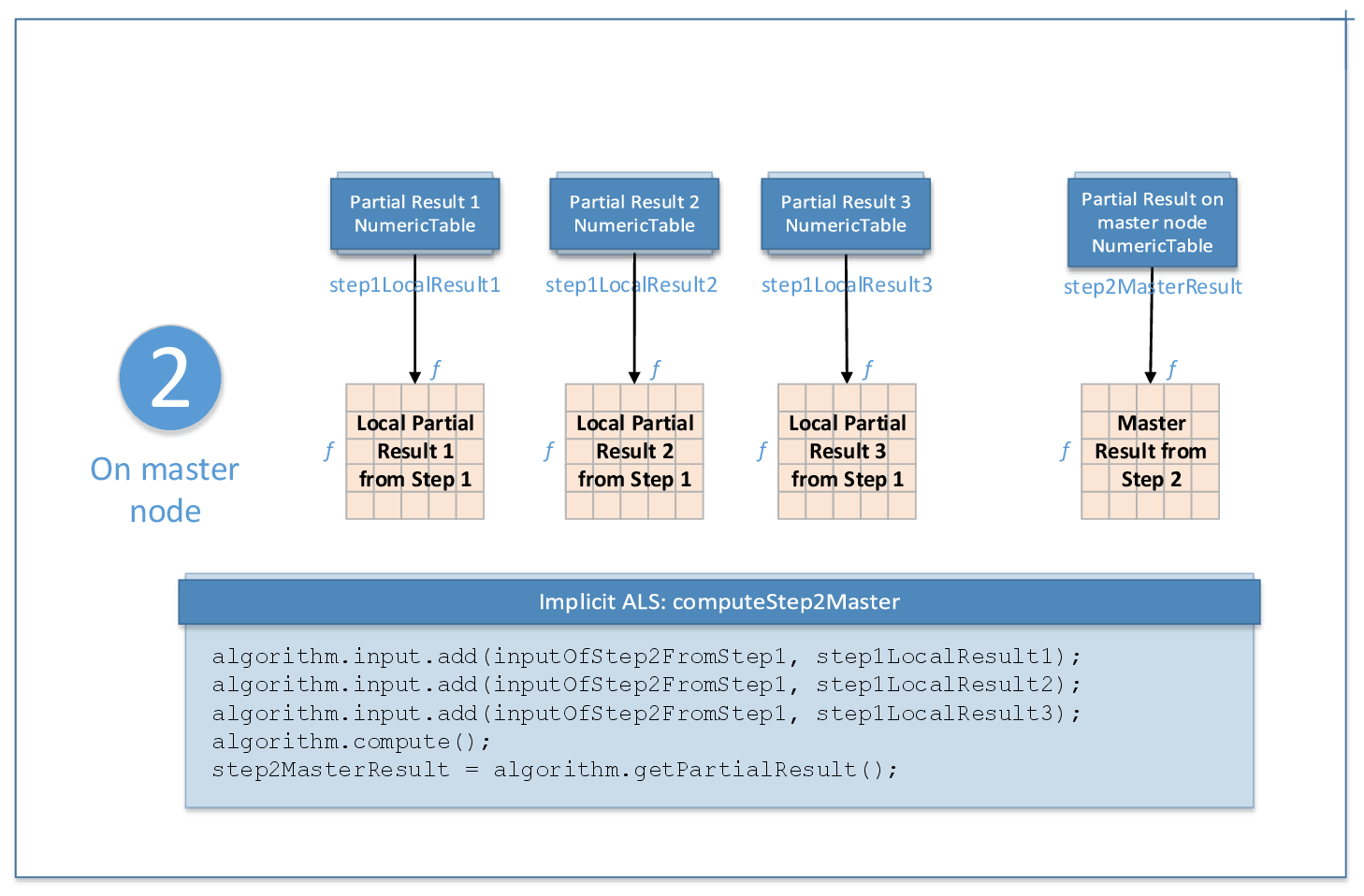

Step 2 - on Master Node

This step uses local partial results from Step 1 as input.

In this step, implicit ALS recommender training accepts the input described below. Pass the Input ID as a parameter to the methods that provide input for your algorithm. For more details, see Algorithms.

| Input ID | Input |
|---|---|
| inputOfStep2FromStep1 | A collection of numeric tables computed on local nodes in Step 1. The collection may contain objects of any class derived from NumericTable except the PackedTriangularMatrix class with the lowerPackedTriangularMatrix layout. |

In this step, implicit ALS recommender training calculates the result described below. Pass the Result ID as a parameter to the methods that access the results of your algorithm. For more details, see Algorithms.

| Result ID | Result |
|---|---|
| outputOfStep2ForStep4 | Pointer to the f x f numeric table with merged cross-products. |
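On the master node, merging the local cross-products can be pictured as an element-wise sum of the f x f tables received from the local nodes. This works because the cross-product of the full factor matrix equals the sum of the cross-products of its row blocks. The following is a minimal sketch over plain row-major vectors; `mergeCrossProducts` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sums a collection of f x f row-major tables element-wise.
// Because Y^T Y = sum_i (Y_i^T Y_i) over the row blocks Y_i, the result is
// the cross-product of the whole factor matrix.
std::vector<double> mergeCrossProducts(
        const std::vector<std::vector<double>>& localTables, std::size_t f) {
    std::vector<double> merged(f * f, 0.0);
    for (const auto& table : localTables) {
        assert(table.size() == f * f);
        for (std::size_t k = 0; k < f * f; ++k)
            merged[k] += table[k];
    }
    return merged;
}
```

The merged table is then needed on every local node as the inputOfStep4FromStep2 input.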

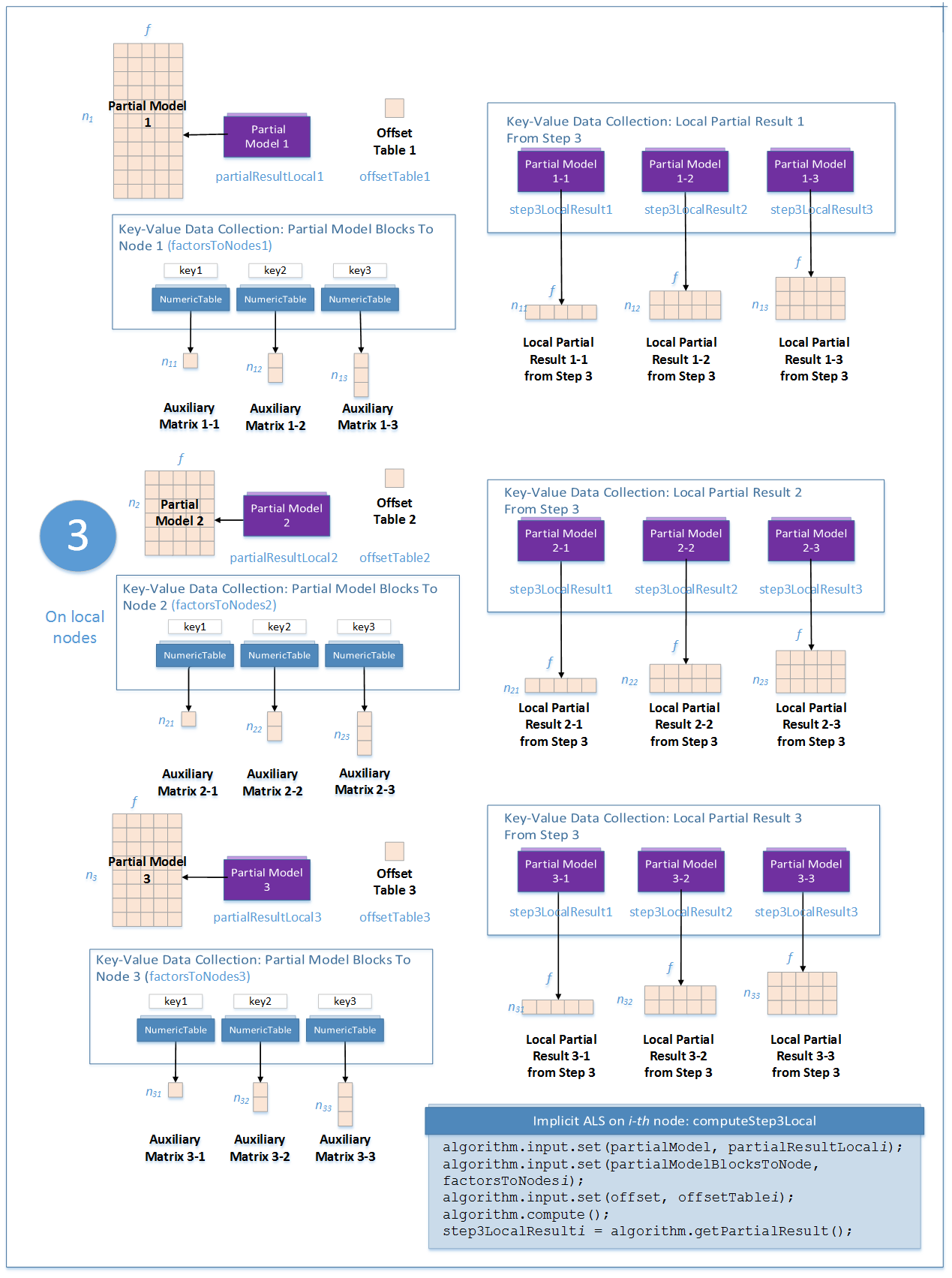

Step 3 - on Local Nodes

On each node i, this step uses the results of the previous steps and requires two extra inputs: Offset Table i and Partial Model Blocks To Node i.

The only element of the Offset Table i table refers to the same factors matrix as in Step 1 (Y when user factors are re-computed, X when item factors are re-computed).

This element must hold the index of the row of that matrix that contains the first element of the partial model obtained in Step 1. In other words, fill this table with the index of the starting row of the part of the input data set located on the i-th node.
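Each offset is the global index of the first row held by a node. Assuming the blocks are laid out contiguously in node order, the offsets for all nodes are therefore the exclusive prefix sums of the per-node row counts. This is a minimal sketch; `computeOffsets` is a hypothetical helper, and each resulting value would go into the corresponding node's 1 x 1 Offset Table i.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Given the number of rows stored on each node (in node order), returns the
// global index of the first row on each node (an exclusive prefix sum).
std::vector<std::size_t> computeOffsets(const std::vector<std::size_t>& rowsPerNode) {
    std::vector<std::size_t> offsets(rowsPerNode.size(), 0);
    std::size_t next = 0;
    for (std::size_t i = 0; i < rowsPerNode.size(); ++i) {
        offsets[i] = next;      // first global row index on node i
        next += rowsPerNode[i]; // skip past the rows held by node i
    }
    return offsets;
}
```

For nblocks = 3 with 4, 3, and 5 rows on the nodes, the offsets are 0, 4, and 7.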

The Partial Model Blocks To Node i input is a key-value data collection that specifies the nodes to which subsets of the partial model must be transferred. For example, if key[k] (such as key1 in the diagram) contains the indices {j1, j2, j3}, rows j1, j2, and j3 of the partial model must be transferred to computation node k.
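The transfer described above can be sketched as a gather: for every destination node k, copy the requested rows of the local partial model into an outgoing block. This sketch assumes that the indices in the collection are global row indices, which are converted to local rows by subtracting the node's offset. It also assumes a row-major layout with f factors per row. `gatherRowsForNodes` and `Block` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major block of factors: nRows x f values.
using Block = std::vector<double>;

// For each destination node k, collects the rows listed in blocksToNode[k].
// Indices are assumed to be global; offset converts them to local row numbers.
std::vector<Block> gatherRowsForNodes(
        const Block& partialModel, std::size_t f, std::size_t offset,
        const std::vector<std::vector<std::size_t>>& blocksToNode) {
    assert(f > 0 && partialModel.size() % f == 0);
    const std::size_t nLocalRows = partialModel.size() / f;
    std::vector<Block> outgoing(blocksToNode.size());
    for (std::size_t k = 0; k < blocksToNode.size(); ++k) {
        for (std::size_t globalRow : blocksToNode[k]) {
            assert(globalRow >= offset && globalRow - offset < nLocalRows);
            const std::size_t localRow = globalRow - offset;
            // Append the f factors of this row to the block for node k.
            outgoing[k].insert(outgoing[k].end(),
                               partialModel.begin() + localRow * f,
                               partialModel.begin() + (localRow + 1) * f);
        }
    }
    return outgoing;
}
```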

In this step, implicit ALS recommender training accepts the input described below. Pass the Input ID as a parameter to the methods that provide input for your algorithm. For more details, see Algorithms.

| Input ID | Input |
|---|---|
| partialModel | Partial model computed on the local node. |
| partialModelBlocksToNode | A key-value data collection that maps components of the partial model to local nodes: the i-th element of this collection is a numeric table that contains the indices of the factors to be transferred to the i-th node. |
| offset | Pointer to the 1 x 1 numeric table that holds the global index of the starting row of the input partial model. |
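One way to build these collections is sketched below for the user-factor update. The idea is that node k needs the item factors for every item that appears in its block of ratings data. So the collection sent from node i to node k lists the items in node i's item range that occur among the column indices of node k's CSR block. This is a minimal sketch under that assumption, using zero-based column indices. `blocksToNodeFor` is hypothetical and is not a DAAL function.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

// For the node that owns item rows [offset, offset + nRows), lists, for each
// destination node k, the owned items that appear in node k's CSR column
// indices (zero-based). Each list is sorted and free of duplicates.
std::vector<std::vector<std::size_t>> blocksToNodeFor(
        std::size_t offset, std::size_t nRows,
        const std::vector<std::vector<std::size_t>>& colIndicesPerNode) {
    std::vector<std::vector<std::size_t>> result;
    for (const auto& cols : colIndicesPerNode) {
        std::set<std::size_t> needed;
        for (std::size_t c : cols)
            if (c >= offset && c < offset + nRows)
                needed.insert(c); // item owned here and rated on node k
        result.emplace_back(needed.begin(), needed.end());
    }
    return result;
}
```

The same construction with users and items swapped would serve the item-factor update.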

In this step, implicit ALS recommender training calculates the result described below. Pass the Result ID as a parameter to the methods that access the results of your algorithm. For more details, see Algorithms.

| Result ID | Result |
|---|---|
| outputOfStep3ForStep4 | A key-value data collection that contains partial models to be used in Step 4. Each element of the collection contains an object of the PartialModel class. |

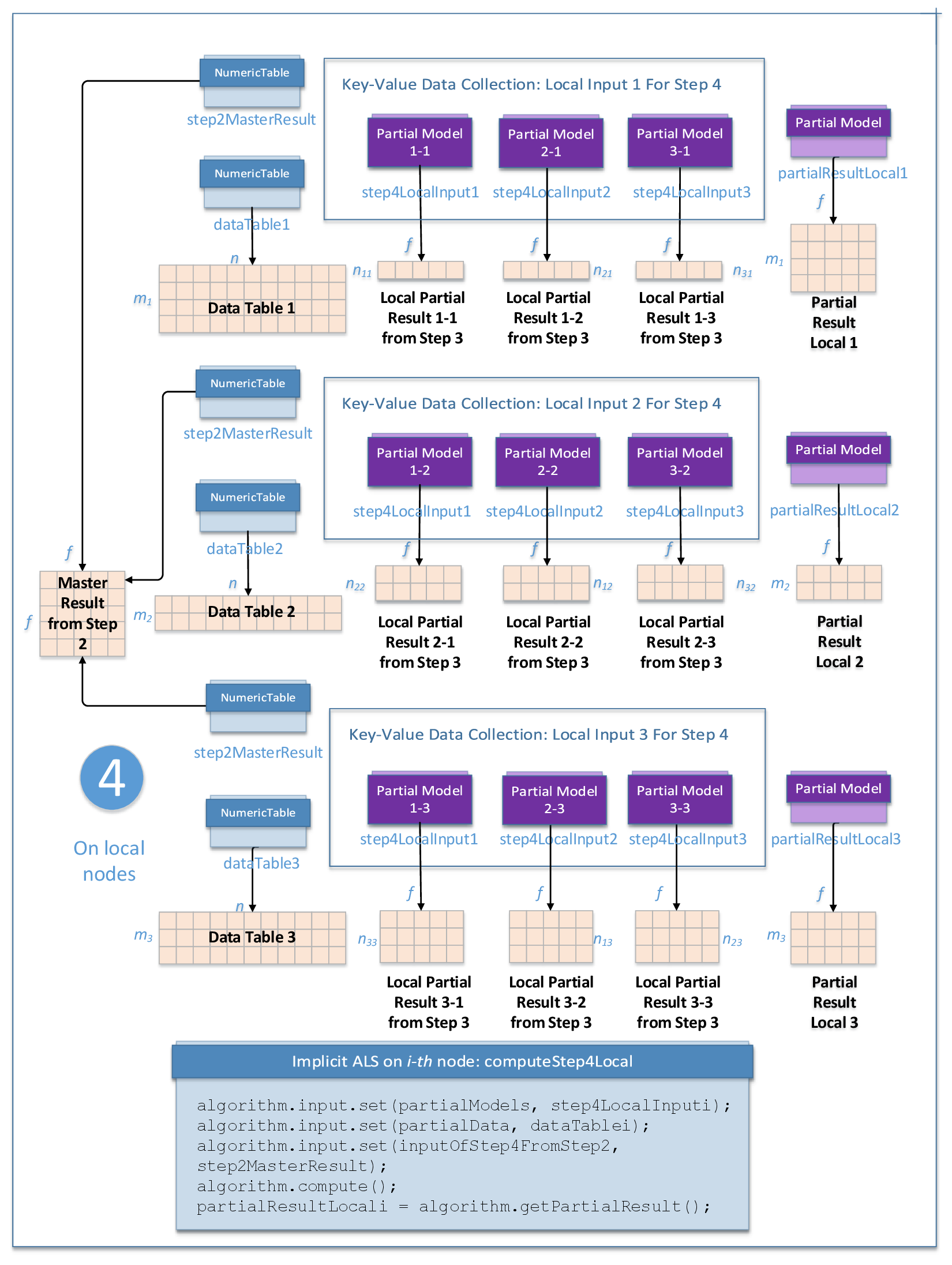

Step 4 - on Local Nodes

This step uses the results of the previous steps and parts of the following matrix in the transposed format:

The results of the step are the re-computed parts of this matrix.
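The sketch below makes the role of each Step 4 input concrete. It re-computes a single user factor x from three things: the merged f x f table of Step 2 (Y^T Y), the item factors received in Step 3, and one row of the CSR ratings data. It assumes the standard implicit-feedback ALS normal equations, which this section does not spell out:

- confidence c = 1 + alpha * r
- preference p = 1 when r exceeds preferenceThreshold (0)
- (Y^T Y + sum of (c - 1) y y^T + lambda I) x = sum of c p y, where both sums run only over the items the user rated

The function names are illustrative assumptions, as are the zero-based column indices, the indexing of item factors by global item index, and the plain lambda I regularization. Any of these may differ from what Intel DAAL does internally.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Matrix = std::vector<std::vector<double>>; // dense matrix stored as rows

// Solves A x = b by Gaussian elimination with partial pivoting.
// Assumes A is nonsingular (for example, lambda > 0).
std::vector<double> solveLinearSystem(Matrix a, std::vector<double> b) {
    const std::size_t f = b.size();
    for (std::size_t col = 0; col < f; ++col) {
        std::size_t pivot = col;
        for (std::size_t r = col + 1; r < f; ++r)
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) pivot = r;
        std::swap(a[col], a[pivot]);
        std::swap(b[col], b[pivot]);
        for (std::size_t r = col + 1; r < f; ++r) {
            const double factor = a[r][col] / a[col][col];
            for (std::size_t c = col; c < f; ++c) a[r][c] -= factor * a[col][c];
            b[r] -= factor * b[col];
        }
    }
    std::vector<double> x(f, 0.0);
    for (std::size_t i = f; i-- > 0;) { // back substitution
        double sum = b[i];
        for (std::size_t c = i + 1; c < f; ++c) sum -= a[i][c] * x[c];
        x[i] = sum / a[i][i];
    }
    return x;
}

// Re-computes one user factor from Y^T Y (Step 2), the item factors received
// in Step 3 (indexed by global item index), and the user's sparse ratings row.
std::vector<double> solveUserFactor(const Matrix& yty, const Matrix& itemFactors,
                                    const std::vector<std::size_t>& items,
                                    const std::vector<double>& ratings,
                                    double alpha, double lambda) {
    assert(items.size() == ratings.size());
    const std::size_t f = yty.size();
    Matrix a = yty;                                    // start from Y^T Y
    std::vector<double> b(f, 0.0);
    for (std::size_t k = 0; k < items.size(); ++k) {
        const std::vector<double>& y = itemFactors[items[k]];
        assert(y.size() == f);
        const double conf = 1.0 + alpha * ratings[k];    // confidence
        const double pref = ratings[k] > 0.0 ? 1.0 : 0.0; // preference, threshold 0
        for (std::size_t i = 0; i < f; ++i) {
            for (std::size_t j = 0; j < f; ++j) a[i][j] += (conf - 1.0) * y[i] * y[j];
            b[i] += conf * pref * y[i];
        }
    }
    for (std::size_t i = 0; i < f; ++i) a[i][i] += lambda; // regularization
    return solveLinearSystem(a, b);
}
```

Because Y^T Y arrives precomputed from Step 2, each node only loops over the nonzeros of its own ratings rows rather than over all items. Swapping the roles of X and Y gives the item-factor update.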

In this step, implicit ALS recommender training accepts the input described below. Pass the Input ID as a parameter to the methods that provide input for your algorithm. For more details, see Algorithms.

| Input ID | Input |
|---|---|
| partialModels | A key-value data collection with partial models that contain user factors/item factors computed in Step 3. Each element of the collection contains an object of the PartialModel class. |
| partialData | Pointer to the CSR numeric table that holds the i-th part of ratings data, assuming that the data is divided by users/items. |
| inputOfStep4FromStep2 | Pointer to the f x f numeric table computed in Step 2. |

In this step, implicit ALS recommender training calculates the result described below. Pass the Result ID as a parameter to the methods that access the results of your algorithm. For more details, see Algorithms.

| Result ID | Result |
|---|---|
| outputOfStep4ForStep1 | Pointer to the partial implicit ALS model that corresponds to the i-th data block. The partial model stores user factors/item factors. |
| outputOfStep4ForStep3 | |